Description:

Photorealistic Earth Orbit Simulator

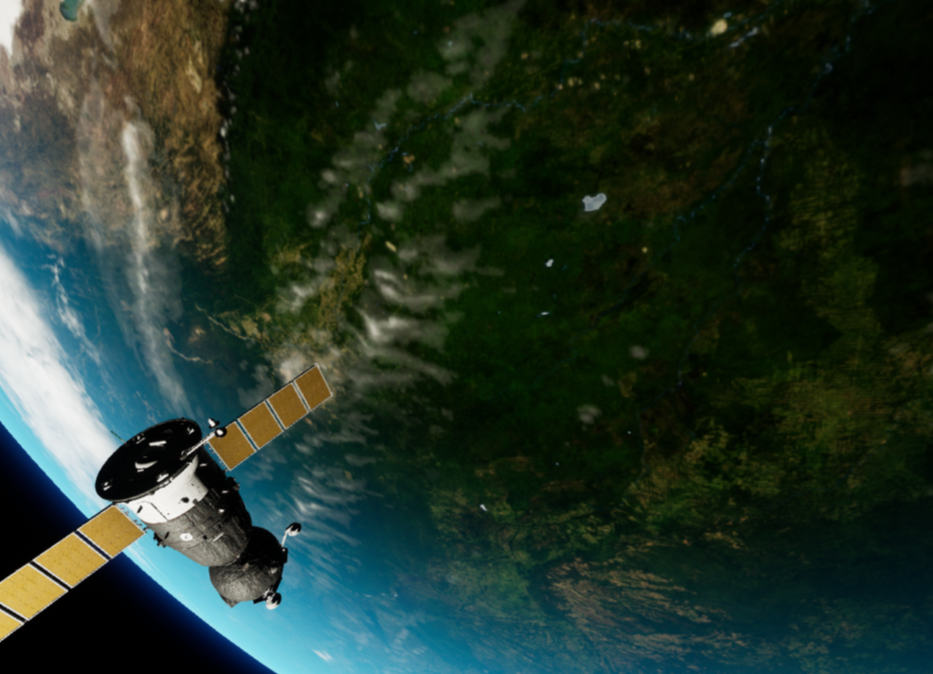

Example frames of a Soyuz model synthesized by URSO

Benefits:

- Improved close-proximity position and attitude estimation

- Validated on URSO datasets and in the ESA pose estimation challenge, where our best model achieved 3rd place on the synthetic test set and 2nd place on the real test set

- Models learned on URSO datasets can perform on real images from space

- Reduced in-flight risk

- Reduced costs

- Reduced data requirement

Applications:

- GNC: guidance, navigation and control

- Rendezvous and Docking (RVD)

- Formation flying, rendezvous, docking, servicing and space debris removal

https://www.youtube.com/watch?v=43xnAn9dFSE

https://www.youtube.com/watch?v=MaCsE6m5PDQ

Technology:

A visual simulator of on-orbit spacecraft, built on Unreal Engine 4, that generates photorealistic datasets for training and benchmarking visual perception algorithms for spacecraft detection, pose estimation and trajectory estimation. State-of-the-art vision algorithms are based on deep learning. However, deep learning requires a vast amount of data, which is prohibitive or costly to collect in space. The main challenge is to successfully transfer models learned on synthetic data to real space operations.

Opportunity:

Licensing of software at TRL 5-6

Simulator for dataset generation, validation and benchmarking of visual perception in Earth orbit. The simulator builds on Unreal Engine and includes a Python interface and toolkit. It features an Earth model with NASA Blue Marble HR textures, atmospheric scattering, lens artefacts (flare, bloom), spacecraft with physically based materials, and ground truth (object pose, depth maps and masks).

Industry - The simulator and dataset generation need to be tailored to the particular mission plan so that the simulated data represent the expected working environment and camera parameters.
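As an illustration of how the ground-truth object pose could support benchmarking, the minimal sketch below compares a predicted pose against a ground-truth pose. It is not part of the URSO toolkit: the function names, the (w, x, y, z) quaternion ordering and the sample values are assumptions made only for this example.

```python
import math


def position_error(t_true, t_pred):
    """Euclidean distance between true and predicted positions (input units)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_true, t_pred)))


def orientation_error_deg(q_true, q_pred):
    """Smallest rotation angle, in degrees, between two quaternions."""
    norm_true = math.sqrt(sum(c * c for c in q_true))
    norm_pred = math.sqrt(sum(c * c for c in q_pred))
    # The absolute value handles the q / -q ambiguity (both encode one rotation).
    dot = abs(sum(a * b for a, b in zip(q_true, q_pred))) / (norm_true * norm_pred)
    # Clamp to 1.0 to guard against rounding slightly above the acos domain.
    dot = min(1.0, dot)
    return math.degrees(2.0 * math.acos(dot))


if __name__ == "__main__":
    # Hypothetical sample: target 10 units ahead, prediction rotated 10 degrees about x.
    gt_t, gt_q = [0.0, 0.0, 10.0], [1.0, 0.0, 0.0, 0.0]
    pred_t = [0.0, 0.3, 10.4]
    half = math.radians(5.0)
    pred_q = [math.cos(half), math.sin(half), 0.0, 0.0]
    print(position_error(gt_t, pred_t))         # approximately 0.5
    print(orientation_error_deg(gt_q, pred_q))  # approximately 10.0
```

Averaging these two errors over a rendered dataset gives a simple benchmark figure. The angle calculation does not depend on the quaternion component ordering, provided both poses use the same convention.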

Contact:

Will Mortimore, Technology Transfer Manager

Tel: +44 (0)1483 68 4909

Email: w.mortimore@surrey.ac.uk

Inventor:

Professor Yang Gao, Associate Dean (International) and Professor of Space Autonomous Systems at the University of Surrey

Recipient of the prestigious 2019 Mulan Award for her contribution to science, technology and engineering.

Professor Yang Gao is the Associate Dean (International) for the Faculty of Engineering and Physical Sciences (FEPS) and the Professor of Space Autonomous Systems at Surrey Space Centre (SSC). She is also the Head of the STAR LAB, which specializes in robotic sensing, perception, visual GNC and biomimetic mechanisms for industrial applications in extreme environments. She brings nearly 20 years of research experience in developing robotics and autonomous systems (RAS), during which she has been Principal Investigator of internationally teamed projects funded by UK Research and Innovation (EPSRC/STFC/Innovate UK), the Royal Academy of Engineering, the European Commission, the European Space Agency and the UK Space Agency, as well as industrial companies such as Airbus, NEPTEC, Sellafield and OHB. Yang is also actively involved in the design and development of real-world space missions such as ESA's ExoMars, Proba3 and LUCE-ice mapper, the UK's MoonLITE/Moonraker, and China's Chang'E3.

Keywords: Space, spacecraft, satellite, software, simulator, photorealistic, image, deep-learning, visual perception, ground truth (object pose, depth maps and masks), 3D sensing and perception, Gao, Yang Prof (Surrey Space Centre), GNC (guidance, navigation and control), Rendezvous and Docking (RVD)